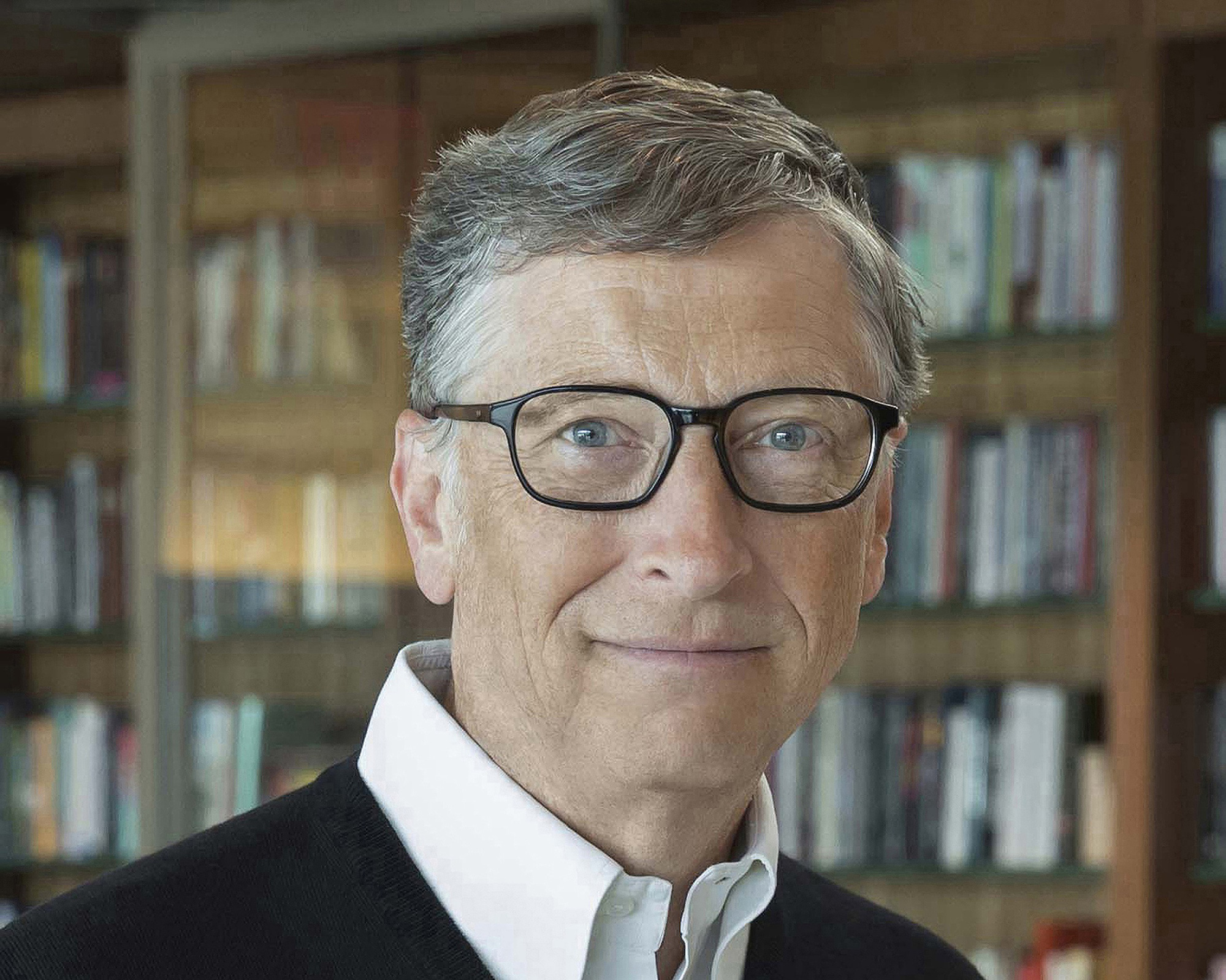

Bill Gates, the renowned co-founder of Microsoft and philanthropist, has become one of the most prominent voices in the technology industry raising alarms about the potential dangers of artificial intelligence (AI).

While many tech enthusiasts and Silicon Valley optimists continue to hail AI as a transformative force capable of solving some of humanity’s most pressing problems, Gates has consistently warned that without proper oversight and ethical frameworks, AI could be just as dangerous as it is beneficial.

His cautionary stance has fueled debate among developers, policymakers, and global leaders, who are increasingly grappling with the complex challenges posed by the rise of AI technologies.

For years, Gates has advocated for a careful, measured approach to AI development. Unlike the more optimistic predictions made by other technology moguls, Gates has pointed out that the power of AI, if not responsibly managed, could lead to disastrous consequences.

In his view, AI’s potential to revolutionize industries, from healthcare to transportation, comes with an equally significant risk of misuse. He has compared AI’s transformative potential to that of nuclear energy, a technology that, while capable of providing immense benefits, also has the potential for catastrophic consequences if mishandled.

Gates’ comparison between AI and nuclear energy highlights his concern that, much like the split atom, AI can be a double-edged sword.

If used for good, AI could help solve complex problems such as climate change, improve healthcare outcomes, and optimize industries in ways that were previously unimaginable.

However, if misused or allowed to develop unchecked, AI could cause widespread societal harm. The risks range from autonomous weapons to mass surveillance systems: AI could infringe upon privacy and civil liberties, and it could perpetuate biases and inequalities. These are risks that cannot be ignored.

As Gates has become increasingly vocal on the issue, his warnings about AI have captured the attention of global leaders and policymakers. In various interviews and public forums, he has stressed the need for governments and companies to implement strong ethical frameworks and regulatory oversight before AI is fully integrated into society.

He has called for proactive regulation that guides the development of AI in a way that maximizes its benefits while minimizing its risks. Gates has also advocated for greater transparency in AI systems, so that their decision-making processes are understandable and accountable to the public.

Gates’ warnings about AI have become more pressing as the technology evolves at an accelerating pace. With advances in machine learning, neural networks, and natural language processing, AI systems are becoming more sophisticated and capable of performing tasks once thought to be uniquely human.

As AI systems learn, adapt, and make decisions on their own, it becomes increasingly difficult to predict how they will behave in complex, real-world scenarios.

Gates has also been a vocal proponent of international collaboration to establish global standards and protocols for AI development.

The conversation around AI safety and regulation has gained traction in recent years, especially as tech companies and governments recognize the need to address the ethical implications of AI.

Gates is not alone in his concerns. Other prominent figures, including Elon Musk and Timnit Gebru, have voiced similar concerns, urging greater oversight and regulation of AI technologies.

Musk, who has been an outspoken critic of AI’s potential dangers, has often warned that AI could be more dangerous than nuclear weapons, calling for stricter regulation to prevent a technological arms race.

Similarly, AI researcher Timnit Gebru has emphasized the importance of ethical considerations in AI development, advocating for more diversity and transparency in the field to avoid reinforcing harmful biases.

Despite these growing calls for caution, a significant divide remains between those who believe AI should be tightly regulated and those who argue that strict regulation could stifle innovation.

Many in the tech industry, particularly within Silicon Valley, are still convinced that the benefits of AI far outweigh the risks, and that excessive regulation could hinder progress and prevent society from fully realizing AI’s potential.

They argue that AI, much like any other powerful technology, can be developed safely and responsibly through collaboration, open dialogue, and continuous improvement.

This divide has led to increasing pressure on policymakers to strike a balance between fostering innovation and ensuring that AI is developed ethically and responsibly. In response, some governments have started to take steps toward establishing AI regulations.

For instance, the European Union has adopted a comprehensive regulation known as the Artificial Intelligence Act, which aims to ensure that AI is used in a way that respects fundamental rights and freedoms.

Similarly, in the United States, the Biden administration has announced plans to develop a national AI strategy that focuses on building a responsible and trustworthy AI ecosystem.

Despite these efforts, many experts argue that the pace of AI development is outstripping the ability of governments and regulatory bodies to keep up. As AI continues to advance, the questions surrounding its impact on privacy, security, and social equity become more urgent.

Gates himself has acknowledged that while progress is being made, there is still much work to be done to ensure that AI is developed in a way that benefits all of humanity. He has stressed the importance of building AI systems that are transparent, explainable, and aligned with human values.

The need for global cooperation in managing AI’s risks is another area where Gates has been vocal. He has called for countries to work together to create international standards for AI that can guide its development and application across borders.

Given the global nature of AI technology, Gates believes that cooperation between governments, businesses, and civil society is essential to ensuring that AI is used for the common good.

He has argued that no single country or company can regulate AI alone, and that global collaboration is necessary to prevent the emergence of dangerous and unregulated AI systems.

As the conversation around AI regulation and ethics continues to evolve, Bill Gates remains one of the most influential voices in the tech world advocating for a balanced, ethical approach to AI development.

His warnings about the dangers of unchecked AI, combined with his calls for strong oversight and international cooperation, have sparked crucial conversations about how to manage the risks associated with this rapidly advancing technology.

While Gates’ concerns are not new, the urgency of his message has only grown as AI reaches into more and more aspects of daily life.

In conclusion, Bill Gates’ role in shaping the conversation around artificial intelligence is invaluable. His vision for AI, not as a panacea but as a powerful tool that requires careful management and oversight, serves as a crucial guide for the future of technology.

As AI continues to evolve, the need for responsible innovation becomes even more apparent. Gates’ warnings and calls for global cooperation are a reminder that while technology has the potential to revolutionize the world, it is up to humanity to ensure that its power is used wisely and ethically.

The next steps in the development of AI will require a delicate balance of innovation, regulation, and ethics, a balance Gates believes must be achieved through collaboration and careful thought.

The future of AI, as Gates has repeatedly emphasized, should be one where its transformative power is harnessed for the greater good, ensuring that society as a whole benefits from its advancements.
