Apple, a company long known for its firm stance on user privacy, has ignited a firestorm of controversy with a bold but polarizing initiative: the proposed implementation of a system to detect and report child sexual abuse material (CSAM) stored in users’ iCloud photo libraries.

The plan, announced with a carefully worded explanation of intent and protections, aims to leverage a combination of image-matching technology and on-device processing to identify known abusive content and alert law enforcement while maintaining privacy. Yet the reaction has been anything but unified.

Supporters hail the effort as a moral imperative, arguing that Apple is finally taking a stand in protecting the most vulnerable. Detractors, including privacy advocates and cryptographers, warn of a dangerous precedent that could erode digital privacy forever, not only within Apple’s ecosystem but across the tech industry as a whole.

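At the heart of the system is NeuralHash, a perceptual hashing technology that Apple says runs on the user's device, derives a hash from each photo, and compares that hash against a database of hashes of known CSAM maintained by organizations like the National Center for Missing and Exploited Children (NCMEC). Unlike a cryptographic hash, a perceptual hash is designed so that visually similar images produce the same or nearly the same value.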
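To make the idea of image hashing concrete, here is a minimal sketch in Python. It does not reproduce NeuralHash, whose internals are not described here. It uses a much simpler stand-in, an "average hash", and the names `average_hash` and `hamming_distance` are hypothetical helpers chosen for illustration. Images are assumed to be small grayscale pixel grids.

```python
from typing import List

Image = List[List[int]]  # assumed input: grayscale pixel grid, values 0-255


def average_hash(pixels: Image) -> int:
    """Stand-in perceptual hash (NOT NeuralHash): one bit per pixel,
    set when the pixel is brighter than the image's mean brightness."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


# Illustrative data only: a tiny 4x4 "photo", a uniformly brightened copy,
# and a visually different image.
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 215],
    [12, 205, 35, 225],
    [18, 198, 28, 230],
]
brightened = [[p + 10 for p in row] for row in original]
different = [list(reversed(row)) for row in original]

same_hash = average_hash(original) == average_hash(brightened)
distance = hamming_distance(average_hash(original), average_hash(different))
```

In this toy case the brightened copy hashes to the same value as the original, while the mirrored image differs in every bit. That tolerance to small edits is what lets a hash match a known image, and it is also why critics ask about false positives.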

If the number of matches in an account crosses a set threshold, Apple reviews the flagged images manually and, if the matches are confirmed, forwards the case to law enforcement. Apple emphasized that the system only activates when iCloud Photos is enabled and that it uses multiple layers of encryption and approval to minimize errors and abuse.
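The threshold rule described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Apple's implementation: the function names, the example hash values, and the threshold values are all hypothetical, and the sketch leaves out the encryption layers entirely.

```python
from typing import Iterable, Set


def count_matches(photo_hashes: Iterable[int], known_hashes: Set[int]) -> int:
    """Count how many photo hashes appear in the set of known hashes."""
    return sum(1 for h in photo_hashes if h in known_hashes)


def needs_human_review(
    photo_hashes: Iterable[int], known_hashes: Set[int], threshold: int
) -> bool:
    """Escalate to manual review only when matches reach the threshold.
    The threshold is a parameter here; the article gives no figure."""
    return count_matches(photo_hashes, known_hashes) >= threshold


# Illustrative values only.
known = {0x1111, 0x2222, 0x3333}
library = [0x1111, 0xAAAA, 0x2222]  # two of three photos match

below = needs_human_review(library, known, threshold=3)
at_or_above = needs_human_review(library, known, threshold=2)
```

With two matching hashes, a threshold of 3 leaves the account unflagged, while a threshold of 2 sends it to review. The design choice is that a single accidental match never triggers human inspection on its own.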

Nevertheless, critics were quick to push back. Privacy experts argue that while the intention may be noble, the tool itself opens the door to mass surveillance.

Once such a system is in place, they contend, governments around the world could pressure Apple to expand its use—not just for CSAM, but for other forms of content deemed illegal or politically sensitive.

Edward Snowden, the former NSA contractor turned whistleblower, called Apple’s move “a dangerous shift” in the balance of digital power. Civil liberties organizations like the Electronic Frontier Foundation (EFF) echoed these concerns, warning that the mechanism, no matter how well-intentioned, establishes a framework ripe for authoritarian exploitation.

Encryption advocates worry that once Apple concedes the ability to scan private content on behalf of government objectives, it becomes difficult to draw a firm line limiting the scope. Today it may be CSAM, tomorrow it may be political dissent, copyrighted materials, or even religious expression in oppressive regimes.

Apple, for its part, attempted to assuage fears through extensive documentation and interviews. Company representatives clarified that the hash-matching process is fundamentally different from scanning photos directly, and that no actual image is reviewed or analyzed unless a clear and repeated match to known CSAM content is detected.

Apple emphasized that it would reject requests from governments to expand the program’s reach, citing its global leadership in privacy standards. Still, these assurances have done little to quell skepticism.

Technologists argue that if the scanning capability exists, it can be turned against users with or without Apple’s consent. Critics point to China, Russia, and other authoritarian governments as examples of how quickly tools intended for protection can be repurposed for control.

In the United States, reactions are split along ideological lines. Conservative commentators largely support the initiative as a moral necessity, praising Apple for finally aligning its tools with law enforcement in the fight against child exploitation.

Some progressive voices, while acknowledging the seriousness of CSAM, caution against any compromise to end-to-end encryption or device-level autonomy.

Tech industry leaders are watching closely. Facebook (Meta), Google, and Microsoft already employ various forms of server-side CSAM detection, but Apple’s move into on-device scanning represents a new frontier. If it succeeds, other companies may follow. If it fails, it may set back the cause of digital trust for years.

The stakes are enormous. Apple has long used privacy as a competitive differentiator, famously challenging the FBI in 2016 by refusing to build software that would unlock an iPhone used by one of the San Bernardino attackers, and framing its stance as a principled defense of user rights.

With the CSAM initiative, Apple finds itself on the defensive. Hashtags like #ApplePrivacyBetrayal and #iSpyApple have trended online. Influential voices in cybersecurity warn that the company is crossing a line from trusted steward to surveillance gatekeeper.

Meanwhile, survivor advocacy groups have applauded Apple for using its power to confront a problem that too often hides in the shadows. For them, the choice is clear: no child’s safety should be compromised in the name of abstract privacy.

Some of the most challenging criticisms come from within Apple’s own community. Current and former employees have reportedly raised concerns internally about the technical and ethical risks of the project.

A group of staff engineers circulated a letter warning that the feature could lead to mission creep. They questioned whether Apple would have the political will to say no if foreign governments demanded expansion.

Similar concerns were raised about the impact on Apple’s reputation in regions with strong privacy protections, such as the European Union, where legal and regulatory challenges could arise.

GDPR compliance, data retention rules, and differing standards for content moderation all complicate Apple’s ability to roll out a one-size-fits-all global policy.

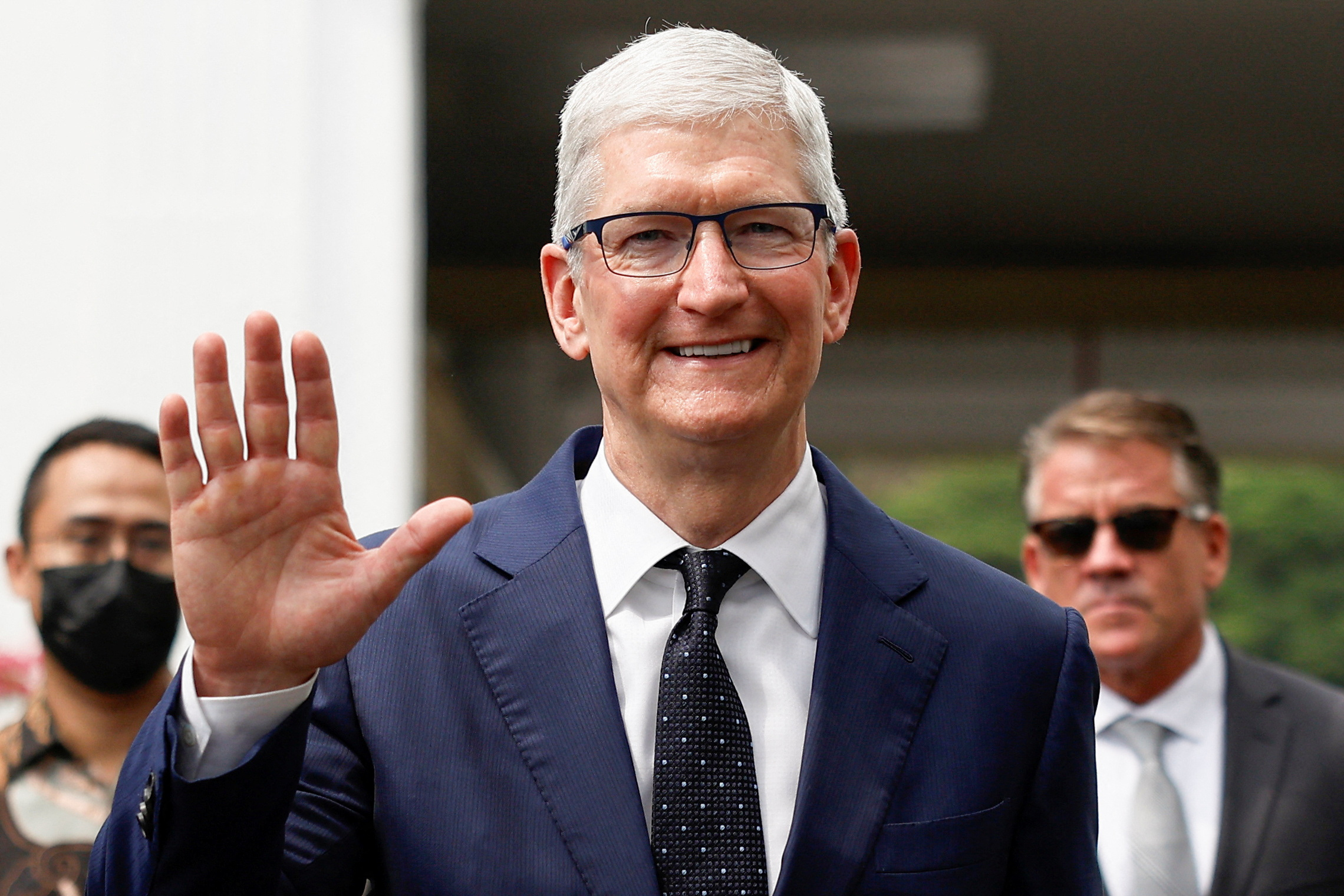

As public debate intensifies, the issue has become a test of Apple’s leadership and credibility. Tim Cook, who has championed privacy and human rights during his tenure, faces a daunting balancing act: how to protect children from harm without compromising the very liberties Apple claims to defend.

Thus far, Cook has remained measured in public remarks, reiterating the company’s commitment to both safety and privacy. In an internal memo, he is said to have expressed confidence that the initiative is both technically sound and ethically necessary. Whether that confidence is shared by users remains uncertain.

Several Apple partners have remained silent, while others have expressed cautious support. Law enforcement agencies worldwide have welcomed the move, stating that tech companies must take more responsibility in helping identify and stop the spread of illegal material.

Still, questions remain about oversight, appeals, and the accuracy of the hash-matching process. If an innocent user is flagged due to a false positive, what recourse do they have? Can the system be audited independently? How transparent will Apple be with the public and with regulators? These unresolved issues continue to fuel opposition.

Some analysts speculate that Apple’s long-term goal is not simply content moderation, but broader AI-driven device intelligence. By establishing a precedent for on-device computation and ethical scanning, Apple may be laying the groundwork for future applications of AI in security, health, and personalization.

If successful, Apple could emerge as the leader in trusted AI deployment—balancing innovation and regulation in ways that competitors struggle to match.

But if the program falters, it could mark a turning point where users begin to question whether the promise of Apple’s ecosystem is worth the price of invisible surveillance.

In the end, Apple’s CSAM detection plan is not just about child protection or privacy. It is about trust. In a digital world increasingly defined by power asymmetries, opaque algorithms, and conflicting values, users must decide whether they believe Apple is acting as protector or as enforcer.

And Apple must decide whether its next great innovation will be remembered as a shield—or as a wedge that split the foundation of digital liberty apart.
